As part of the project I wanted to use the networking features of the Raspberry Pi Zero W to stream internet radio and, possibly, to play mp3 files from a network share.

The Zero has a single-core CPU, whereas the Pi 3 is quad-core, and it has 512MB of memory rather than the Pi 3's 1GB. It has fewer computational resources, but it is still capable enough for this job.

As I will be using various display boards and controllers attached via the GPIO header, I didn't need the full Pixel desktop. This meant the board would be configured as a headless node with SSH access.

After creating an SD card with the operating system, for which there are plenty of guides, I updated the OS to ensure I was using the latest version.

sudo apt-get update

sudo apt-get upgrade

I also configured the Pi to boot to the command line and enabled SSH, not forgetting to change the default password. Finally, I enabled the I2C interface, which is required for the Micro Dot pHAT, the OLED display and the RTC I am going to use.

Configuring the HATs and hardware

There are a number of HATs (or Bonnets) that I'm using, along with some additional hardware, and these require some libraries to get them working. There are plenty of examples on the vendors' websites and elsewhere on the Internet, but here are some pointers.

Real time clock

I am using the Adafruit DS3231 Precision RTC Breakout as the RTC in this project. Installation instructions can be found on their website: "Adding a Real Time Clock to Raspberry Pi".

The main steps for configuration are

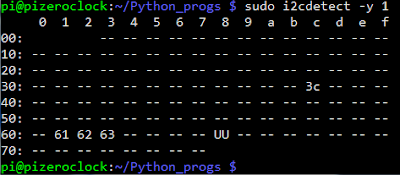

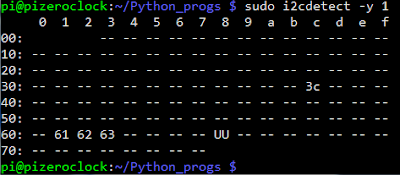

Verify Wiring (I2C scan)

sudo i2cdetect -y 1

This should show an entry for 0x68.

Once the kernel driver is running, i2cdetect will skip over 0x68 and display UU instead.

You can add support for the RTC by adding a device tree overlay. Run the following command

sudo nano /boot/config.txt

Add dtoverlay=i2c-rtc,ds3231 to the end of the file. Save it and run sudo reboot to restart the Pi.
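If you would rather script this step than edit the file by hand, the following is a minimal sketch of one way to do it. The helper name ensure_overlay is my own invention for illustration; it only appends the overlay line when an active (uncommented) copy is not already present, so running it twice is harmless. It assumes the same /boot/config.txt path used above and needs to be run with sudo.

```python
def ensure_overlay(config_text, overlay="dtoverlay=i2c-rtc,ds3231"):
    """Return config_text with the overlay line appended unless it is already active."""
    active_lines = [line.strip() for line in config_text.splitlines()]
    if overlay in active_lines:
        return config_text  # already present and not commented out
    if config_text and not config_text.endswith("\n"):
        config_text += "\n"  # make sure the new entry starts on its own line
    return config_text + overlay + "\n"


if __name__ == "__main__":
    path = "/boot/config.txt"  # same file as edited with nano above
    with open(path) as handle:
        original = handle.read()
    updated = ensure_overlay(original)
    if updated != original:
        with open(path, "w") as handle:
            handle.write(updated)
```

A reboot is still needed afterwards for the overlay to take effect.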

Log in and run sudo i2cdetect -y 1 again to see UU show up where 0x68 would have been previously.

i2cdetect results
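The 0x68 versus UU check can also be done from a script. This is a rough sketch rather than a definitive tool: the function names are my own, and it assumes the usual i2cdetect grid layout, where each row starts with a prefix such as "60: " followed by sixteen three-character cells.

```python
import subprocess


def cell_at(i2cdetect_output, address):
    """Return the grid cell that i2cdetect printed for the given I2C address."""
    row_prefix = "{:02x}:".format(address & 0xF0)
    column = address & 0x0F
    for line in i2cdetect_output.splitlines():
        if line.startswith(row_prefix):
            start = 4 + 3 * column  # 4 characters of row prefix, 3 per cell
            return line[start:start + 2].strip()
    return ""


def rtc_status(i2cdetect_output, address=0x68):
    """Classify the RTC address as 'driver loaded', 'detected' or 'not found'."""
    cell = cell_at(i2cdetect_output, address)
    if cell == "UU":
        return "driver loaded"  # the kernel driver has claimed the address
    if cell == "{:02x}".format(address):
        return "detected"  # the chip answers, but no driver is bound yet
    return "not found"


if __name__ == "__main__":
    output = subprocess.check_output(["i2cdetect", "-y", "1"], universal_newlines=True)
    print(rtc_status(output))
```

Before the overlay is added this should report "detected", and after the reboot "driver loaded".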

Micro Dot pHAT

There is an excellent getting started tutorial for the Micro Dot pHAT on the Pimoroni website (https://learn.pimoroni.com/tutorial/sandyj/getting-started-with-micro-dot-phat).

The software library is available at https://github.com/pimoroni/microdot-phat, but Pimoroni also provide an excellent install script. Open a new terminal and type the following, making sure to type 'y' or 'n' when prompted:

curl https://get.pimoroni.com/microdotphat | bash

Stereo Amplifier Bonnet

Adafruit provide an excellent range of support material, and there is an Adafruit tutorial for the Stereo Bonnet (https://learn.adafruit.com/adafruit-speaker-bonnet-for-raspberry-pi). Again there is a good install script. Open a new terminal and type the following, making sure to type 'y' or 'n' when prompted:

curl -sS https://raw.githubusercontent.com/adafruit/Raspberry-Pi-Installer-Scripts/master/i2samp.sh | bash

OLED display

Installing the display is a more traditional process. As the board is not from a mainstream manufacturer, I went for the Luma OLED library, and as it worked I'm sticking with it for this project. Open a new terminal and type the following:

$ sudo apt-get install python-dev python-pip libfreetype6-dev libjpeg-dev

$ sudo -H pip install --upgrade pip

$ sudo apt-get purge python-pip

$ sudo -H pip install --upgrade luma.oled

$ git clone https://github.com/rm-hull/luma.examples.git

$ cd luma.examples

$ sudo -H pip install -e .
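Once the library is installed, a quick way to check the display is to draw some text on it. The sketch below is only an illustration under some assumptions of mine: that the display uses an SSD1306 controller and sits at I2C address 0x3C (neither is confirmed above, so adjust both for your board), and that showing the time is a sensible test. The clock_text and show_clock names are hypothetical helpers, not part of luma.

```python
import time
from datetime import datetime


def clock_text(now):
    """Format a datetime as HH:MM for the display."""
    return now.strftime("%H:%M")


def show_clock():
    # Imported here so clock_text can be used without the hardware libraries.
    from luma.core.interface.serial import i2c
    from luma.core.render import canvas
    from luma.oled.device import ssd1306  # assumption: SSD1306-based display

    serial = i2c(port=1, address=0x3C)  # assumption: display is at 0x3C on bus 1
    device = ssd1306(serial)
    with canvas(device) as draw:
        draw.text((0, 0), clock_text(datetime.now()), fill="white")
    time.sleep(10)  # keep the script running so the text can be read


if __name__ == "__main__":
    show_clock()
```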

Sound utilities

There are a lot of choices for playing sound files and streaming audio. I use two players.

mp3 player

I went for a well-established player, mpg123, which had a number of features that made it attractive, and as it worked I didn't go looking for the perfect player. To install mpg123, open a new terminal and type the following:

sudo apt-get install -y mpg123
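As the rest of the project is driven from Python, here is a minimal sketch of how mpg123 might be launched from a script. The mpg123_command helper is my own name for illustration. It uses the -q (quiet) option and, for playlist files or URLs, the -@ (list) option; the file suffixes treated as playlists are an assumption on my part, as are the example path and URL.

```python
import subprocess

# Assumption: sources ending in these suffixes are playlists rather than audio.
PLAYLIST_SUFFIXES = (".m3u", ".pls")


def mpg123_command(source):
    """Build the mpg123 argument list for a local file, a stream URL or a playlist."""
    command = ["mpg123", "-q"]  # -q keeps mpg123 quiet on the console
    if source.lower().endswith(PLAYLIST_SUFFIXES):
        command.append("-@")  # read the list of tracks or streams from the playlist
    command.append(source)
    return command


if __name__ == "__main__":
    # Hypothetical path on a mounted network share.
    player = subprocess.Popen(mpg123_command("/mnt/music/example.mp3"))
    player.wait()
```

Using Popen rather than a blocking call means the script could later stop playback with player.terminate(), for example when an alarm is dismissed.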

The big problem with the sound HAT I used and the sound utilities is that it is difficult to control the volume in software. To get volume control working I had to configure a volume controller.

To do this I needed to create a new softvol device, using speakerphat as the device name and Master as the control name. I used Master because the sound card does not have a master volume control of its own.

To determine whether the name is already used by an existing control (it shouldn't be if you are following these instructions), I checked the existing audio controls using the following:

amixer controls | grep Master
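The same check can be sketched in Python by parsing the output of amixer controls. The helper names are mine, and the sketch assumes each control is listed on a line containing a name='...' field. Like the grep above, it matches on a substring, so a control called "Master Playback Volume" would also count.

```python
import re
import subprocess


def control_names(amixer_controls_output):
    """Extract the control names from the output of 'amixer controls'."""
    return re.findall(r"name='([^']*)'", amixer_controls_output)


def has_control(amixer_controls_output, name="Master"):
    """Return True if any control name contains the given text."""
    return any(name in found for found in control_names(amixer_controls_output))


if __name__ == "__main__":
    output = subprocess.check_output(["amixer", "controls"], universal_newlines=True)
    print("Master control exists" if has_control(output) else "No Master control yet")
```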

As it didn't find a control called Master, following some of the available tutorials was a bit easier. To get this all working, I found I had to create some configuration files.

Create ~/.asoundrc:

pcm.softvol {

type softvol

slave {

pcm "speakerphat"

}

control {

name "Master"

card 0

}

}

pcm.!default {

type plug

slave.pcm "softvol"

}

Create /etc/asound.conf:

pcm.!default {

type plug

slave.pcm "speakerphat"

}

ctl.!default {

type hw card 0

}

pcm.speakerphat {

type softvol

slave.pcm "plughw:0"

control.name "Master"

control.card 0

}

After this it was possible to use amixer to set the playback volume.
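For use from the alarm clock software, setting the volume might look something like the sketch below. The volume_command helper is a name I made up for illustration. It builds an amixer sset call for the Master control on card 0, matching the configuration above, and clamps the requested level to the 0 to 100 range.

```python
import subprocess


def volume_command(percent, control="Master", card=0):
    """Build an amixer command that sets the given control to a percentage."""
    level = max(0, min(100, int(percent)))  # clamp to a valid percentage
    return ["amixer", "-c", str(card), "sset", control, "{}%".format(level)]


if __name__ == "__main__":
    subprocess.check_call(volume_command(40))  # set playback volume to 40%
```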

Additional software

In addition to the above, I also installed some components that could be helpful for later additions to the device.

As part of the software to drive this I'm considering a web-based GUI to make setting alarms and editing playlists easier. To that end I have installed a lightweight database and web server.

I have gone for SQLite3, Lighttpd and PHP5. Again, there are a lot of tutorials on the internet covering the installation and use of this combination of software.

sudo apt-get -y install lighttpd

sudo apt-get -y install sqlite3

sudo apt-get -y install php5 php5-common php5-cgi php5-sqlite

sudo lighty-enable-mod fastcgi

sudo lighty-enable-mod fastcgi-php

sudo service lighttpd force-reload

sudo chown -R www-data:www-data /var/www

sudo chmod -R 775 /var/www

sudo usermod -a -G www-data pi

sudo reboot

To test the software I used the following files placed into /var/www/html

test.php

<?php

phpinfo();

?>

testdb.php

<?php

try {

// Create file "scandio_test.db" as database

$db = new PDO('sqlite:scandio_test.db');

// Throw exceptions on error

$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

$sql = <<<SQL

CREATE TABLE IF NOT EXISTS posts (

id INTEGER PRIMARY KEY,

message TEXT,

created_at INTEGER

)

SQL;

$db->exec($sql);

$data = array(

'Test '.rand(0, 10),

'Data: '.uniqid(),

'Date: '.date('d.m.Y H:i:s')

);

$sql = <<<SQL

INSERT INTO posts (message, created_at)

VALUES (:message, :created_at)

SQL;

$stmt = $db->prepare($sql);

foreach ($data as $message) {

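// bindValue takes a value directly; bindParam needs a variable passed by reference

$stmt->bindValue(':message', $message, PDO::PARAM_STR);

$stmt->bindValue(':created_at', time(), PDO::PARAM_INT);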

$stmt->execute();

}

$result = $db->query('SELECT * FROM posts');

foreach($result as $row) {

list($id, $message, $createdAt) = $row;

$output = "Id: $id<br/>\n";

$output .= "Message: $message<br/>\n";

$output .= "Created at: ".date('d.m.Y H:i:s', $createdAt)."<br/>\n";

echo $output;

}

$db->exec("DROP TABLE posts");

} catch(PDOException $e) {

echo $e->getMessage();

echo $e->getTraceAsString();

}

?>
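Since the CGI side of the project is going to be in Python, the same kind of database smoke test can be sketched with Python's built-in sqlite3 module. This is not a translation of the PHP script line for line. It is a minimal sketch with a function name and sample messages of my own, and it uses an in-memory database by default so nothing is left behind on disk.

```python
import sqlite3
import time


def smoke_test(db_path=":memory:"):
    """Create the posts table, insert two rows, read them back and drop the table."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS posts ("
            "id INTEGER PRIMARY KEY, message TEXT, created_at INTEGER)"
        )
        now = int(time.time())
        messages = ["Test row", "Another row"]  # sample data for illustration
        conn.executemany(
            "INSERT INTO posts (message, created_at) VALUES (?, ?)",
            [(message, now) for message in messages],
        )
        rows = conn.execute(
            "SELECT id, message, created_at FROM posts ORDER BY id"
        ).fetchall()
        conn.execute("DROP TABLE posts")
        conn.commit()
        return rows
    finally:
        conn.close()


if __name__ == "__main__":
    for row_id, message, created_at in smoke_test():
        print("Id: {} Message: {} Created at: {}".format(row_id, message, created_at))
```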

Configuring Lighttpd

To configure Lighttpd to support Python as a CGI language, lighttpd.conf needs to be updated to include the cgi module. Add the following entry to the server.modules section of the configuration file (/etc/lighttpd/lighttpd.conf):

"mod_cgi",

At the end of the configuration file add the following

$HTTP["url"] =~ "^/cgi-bin/" {

cgi.assign = ( ".py" => "/usr/bin/python" )

}

The lighttpd daemon will need to be restarted using the following command

sudo service lighttpd force-reload

The cgi-bin directory needs to be created in the root of the web server, and it will need the correct permissions.

sudo mkdir /var/www/html/cgi-bin
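To check that the CGI configuration works, a small script can be dropped into the new cgi-bin directory. The following is a minimal sketch; the file name hello.py and the page content are my own choices for illustration. A CGI script has to write its headers first, then a blank line, then the body.

```python
#!/usr/bin/python
import sys
import time


def render_response(now=None):
    """Return a complete CGI response: header, blank line, then a small HTML page."""
    if now is None:
        now = time.localtime()
    stamp = time.strftime("%H:%M:%S", now)
    body = "<html><body><p>CGI is working. Server time: {}</p></body></html>".format(stamp)
    return "Content-Type: text/html\r\n\r\n" + body


if __name__ == "__main__":
    sys.stdout.write(render_response())
```

Saved as /var/www/html/cgi-bin/hello.py (a hypothetical name), it should then be reachable in a browser at /cgi-bin/hello.py on the Pi's address.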

That about wraps up the basic configuration of the Pi Zero W and the hardware.

Previous articles

http://geraintw.blogspot.co.uk/2017/05/raspberry-pi-based-radio-alarm-clock.html